claude and google leaked some seo information. it’s full of gold and implications for search and getting your product/service found online. it’s so crucial i made a long guide and a quick cheat sheet for everyone. cook these into your content strategies to be picked up by llms.

now if you’re interested…let’s dive a bit deeper together:

2 pivotal pieces came out

we're in 'ai mode' now. these are some of the implications of llms taking over the 'consideration' and potentially the 'purchase' parts of the customer journey.

the next era of ux won’t be for people. it’ll be for agents

why? because the user doesn’t care where content comes from as long as they get viable answers.

also why? well because designing interfaces, copy, and flows for human attention made sense, until the attention moved

and it moved to bots…

search is no longer a human typing a keyword and clicking a link

in 'ai mode', it’s a language model fanning out a query into 50 latent intents, retrieving semantically rich passages, synthesizing answers, and returning a response without the user ever seeing your page

you’re not designing for users anymore. you’re designing for interpreters.

this changes a lot

→ your "user" is an agent that reasons in chains

→ your "journey" is a fan-out of 100 sub-queries

→ your "conversion" happens without a click

→ your "visibility" depends on citation, not rank
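
to make the fan-out idea concrete, here is a minimal sketch in python. everything in it is an assumption for illustration: the intent templates, the word-overlap scoring, and the example.com-style sources are made up, and a real ai search system would generate sub-queries and rank passages with models rather than templates.

```python
import re
from collections import Counter
from typing import Optional

# Hypothetical intent templates. A real system would generate sub-queries
# with a language model instead of a fixed list.
INTENT_TEMPLATES = [
    "what is {q}",
    "{q} pricing",
    "{q} alternatives",
    "{q} pros and cons",
]


def tokens(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def fan_out(query: str) -> list:
    """Expand one query into one sub-query per intent template."""
    return [template.format(q=query) for template in INTENT_TEMPLATES]


def best_passage(sub_query: str, passages: dict) -> Optional[str]:
    """Return the source whose passage shares the most words with the sub-query."""
    if not passages:
        return None
    wanted = tokens(sub_query)
    scored = {src: len(wanted & tokens(text)) for src, text in passages.items()}
    source = max(scored, key=scored.get)
    return source if scored[source] > 0 else None


def count_citations(query: str, passages: dict) -> Counter:
    """Count how many sub-queries each source ends up answering."""
    cited = Counter()
    for sub_query in fan_out(query):
        source = best_passage(sub_query, passages)
        if source is not None:
            cited[source] += 1
    return cited


# Made-up passages for a made-up product.
passages = {
    "site-a.example": "acme crm is a sales tool and pricing starts at 20 dollars per seat",
    "site-b.example": "acme crm alternatives, with pros and cons for each option",
    "site-c.example": "welcome to our homepage, we are passionate about synergy",
}

citations = count_citations("acme crm", passages)
print(citations)
```

in this toy run the page with generic brand copy is never picked for any sub-query, which is the point of the bullets above: visibility is earned per sub-query, not per keyword.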

so what does ux for agents look like?

→ passages that are semantically complete in isolation

→ clear comparisons and structured tradeoffs

→ named entities that map to the knowledge graph

→ factual, verifiable data that earns citations

→ modular, remixable content chunks (lists, tables, TL;DRs)

→ multimodal formats: text + video + audio + imagery

→ answer-first phrasing optimized for recomposition

→ vector similarity > keyword density
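
a rough way to see why "vector similarity > keyword density" matters is the sketch below. it is a simplification under stated assumptions: it uses plain word-count vectors as a stand-in for the learned embeddings real systems use, and the query and both passages are invented.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_density(text: str, keyword: str) -> float:
    """Share of tokens in the text that exactly match the keyword."""
    words = tokenize(text)
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity between the word-count vectors of two texts."""
    va, vb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


query = "best running shoes for flat feet"
stuffed = "shoes shoes shoes buy shoes cheap shoes shoes online"
complete = "the best running shoes for flat feet offer arch support and a stable heel"

for name, passage in [("stuffed", stuffed), ("complete", complete)]:
    density = keyword_density(passage, "shoes")
    similarity = cosine_similarity(query, passage)
    print(f"{name}: density={density:.2f} similarity={similarity:.2f}")
```

the keyword-stuffed passage wins on density but loses on similarity to the full query, while the passage that is complete in isolation does the opposite.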

this is what relevance engineering looks like in practice. not optimizing for ranking, but for reasoning. not writing for users, but for interpreters that represent users.

in this new world: the future of ux is agent experience. and the best seos are already making the leap. kinda creepy sure…

my tips on tools to get started:

→ profound (agent visibility tracking)

→ qforia (fan-out query simulation)

→ surfer ai (semantic content structuring)

→ clearscope (passage-level optimization)

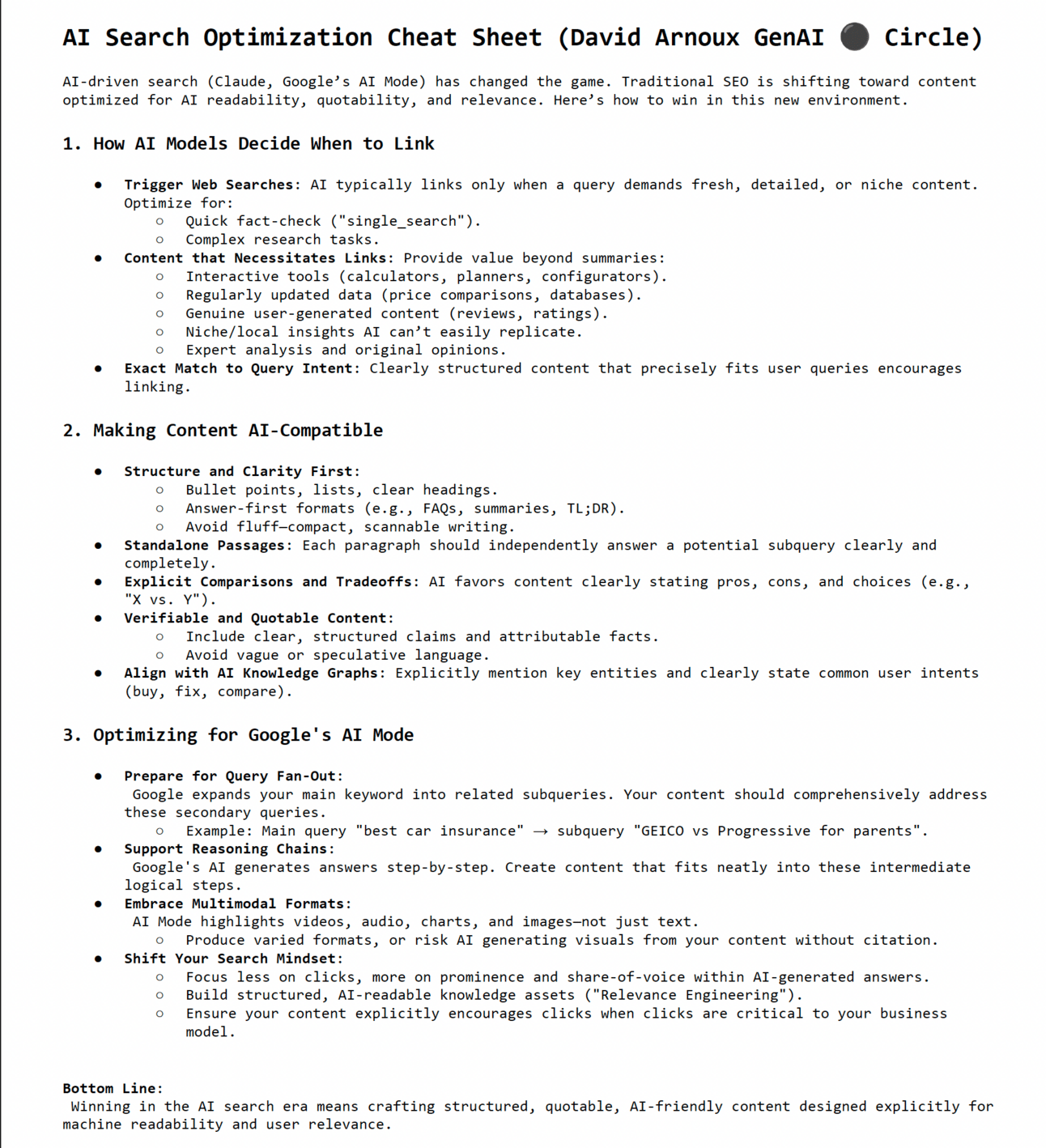

→ claude only triggers web search when its memory hits a wall: either for fast facts ("single_search") or complex tasks ("research"). that's when your content might get pulled in and shown.

→ there are just four search modes hardwired into it: never_search, do_not_search_but_offer, single_search, research. this decides if and how it uses tools.

→ claude links only if your content fits the query like a glove, isn’t already in its memory, and is well-structured. bonus points if it’s interactive or unique: not just brand fluff.

→ no copy-paste - claude avoids quoting more than 20 words in a row from any external site. it paraphrases instead. it won’t cite everything either.

→ seo is mutating. the new game is llm citation optimization - write to get cited, not ranked. clean structure, sharp facts, and stuff it can't just "know" already.
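
the four modes and the quoting limit described above can be written down as a small python sketch. this only mirrors the description in this post: the mode names and the 20-word figure come from the bullets above, while the helper functions are hypothetical and say nothing about how claude is actually implemented.

```python
from enum import Enum


class SearchMode(Enum):
    """The four search categories named in this post."""
    NEVER_SEARCH = "never_search"
    DO_NOT_SEARCH_BUT_OFFER = "do_not_search_but_offer"
    SINGLE_SEARCH = "single_search"
    RESEARCH = "research"


# Quoting limit as stated in this post, not an official constant.
MAX_QUOTE_WORDS = 20


def can_surface_content(mode: SearchMode) -> bool:
    """Hypothetical helper: only the two searching modes can pull in a page."""
    return mode in {SearchMode.SINGLE_SEARCH, SearchMode.RESEARCH}


def exceeds_quote_limit(quote: str) -> bool:
    """Hypothetical helper: True if a quote runs longer than the stated limit."""
    return len(quote.split()) > MAX_QUOTE_WORDS


for mode in SearchMode:
    print(mode.value, can_surface_content(mode))
```

the practical takeaway is the same as in the bullets: your content only has a chance in the two searching modes, and even then expect a paraphrase with a link rather than a long quote.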

bonus

i also shared a bunch of tips and tricks i learned from jan-willem bobbink a few months ago, many still hold true

here’s what’s changing:

→ navigational searches ("xxx website"): these still belong to search engines. people know where they’re going.

→ informational searches ("signs of skin rash"): these are moving fast toward llms. why scroll through 10 links when ai gives the answer instantly?

→ google giving more and more real estate to ai answers means fewer clicks on links. a lot fewer.

→ commercial mid-funnel ("best x for y"): this is the battleground. llms are primed to dominate.

overall, llm usage is still limited but growing, and it depends on whether yours is an intent-driven or a "discovery" product/service.

lower-funnel searches ("buy x, y, z") will stay with google and amazon. direct intent means direct action.

tips

→ listicles, listicles, listicles!

→ pr and brand mentions matter more than mass backlinks (try out mvpr).

→ citations and brand mentions by established sources are key. models need to verify data points. this isn’t new, but it’s more important now.

→ are we back to 1950s–80s marketing? build brand, get mentioned.

→ citations where? examples: openai partnerships with hearst, le monde, prisa, vox media, condé nast, the atlantic, gedi, news corp, and time. also, perplexity’s publisher program has partnerships with time, entrepreneur, the texas tribune, and der spiegel.

→ social platforms (reddit, medium) drive fresh link juice that google values more than static backlinks. be everywhere.

→ quality over quantity is back. big brands show up more, yes, but lower in rankings.

→ an llms.txt file might become a thing.
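
if you want to experiment with llms.txt, a file can be generated with a few lines of python. this is a sketch under assumptions: the layout (a title, a one-line summary, then sections of annotated links) follows the public llms.txt proposal as i understand it, and the product name, urls, and notes are placeholders.

```python
def build_llms_txt(title: str, summary: str, sections: dict) -> str:
    """Render a markdown-style llms.txt: title, summary, then link sections.

    `sections` maps a section heading to a list of (name, url, note) tuples.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content for a made-up product.
llms_txt = build_llms_txt(
    title="acme crm",
    summary="a sales crm for small teams",
    sections={
        "docs": [
            ("pricing", "https://example.com/pricing.md", "plans and per-seat prices"),
            ("comparison", "https://example.com/compare.md", "tradeoffs versus alternatives"),
        ],
    },
)
print(llms_txt)
```

the output would be served from the root of your site as /llms.txt. whether any model actually reads it is still an open question, which is why this is a "might".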

the way people discover products, learn, and make decisions is being rewritten. llms will become the default for nuanced, context-driven queries. others (like my uncle) will stick to what they know for the next 20 years.

oh, and what will the impact of voice be?

winners? it seems established brands have an edge, but for new players the same rules still hold: invest in seo (it never dies). it’s a marathon with huge long-term roi.

invest in quality and quantity of content + get cited (pr).

stay relevant.

that’s it

this space is moving so fast… we’re back to a different type of seo. we’re optimizing for agents.

exciting gtm times ahead.

⬛️

ps… further reading to go even deeper

https://wordlift.io/blog/en/query-fan-out-ai-search/

and

https://ahrefs.com/blog/generative-engines-are-breaking-web-analytics/

👋 by the way, we run a community called genai ⚫️ circle. it’s where i learn everything i share. it’s invite-only. as a subscriber of this newsletter you can apply to join. check it out here: www.thegenaicircle.com
