llms are making us lazier, dumber and more creatively homogeneous (so less creative). no time to read? watch this video where i dive into the research and share tips on how to fight cognitive decline. check it out here:

are llms making your brain lazy? yes.

have you ever thrown a trivial task at an llm, one you could do faster by hand, just because it was easier to prompt?

like asking claude to write an email subject line or chatgpt to find you some basic synonyms or alphabetize a list of five items. asking ai to count the number of words in a paragraph you just wrote. stuff you could do in your head in two seconds, but you’d rather prompt anyway.

i caught myself asking claude to capitalize the first letter of each word in a title yesterday. literally just hitting shift five times. but i wrote a prompt instead.

what does the research say? let’s go deep, but first a summary if you don’t have time:

do read on, it gets more interesting:

wharton tracked students for months and the results are bad

wharton business school just published something that made me close my laptop and stare at the wall for a bit (not a true story… but it's good for dramatic effect).

so basically they tracked students using chatgpt for math homework. during practice sessions with ai, students performed 48% better. makes sense. ai helps, grades go up.

but when exam time came and students couldn't use ai? they scored 17% worse than students who never used ai at all. not just worse than their ai-assisted performance. worse than kids who struggled through homework without help.

the ai didn't just fail to help them learn. it made them worse at math. btw the researchers ran it again because they didn't believe it. same results.

ok next study…

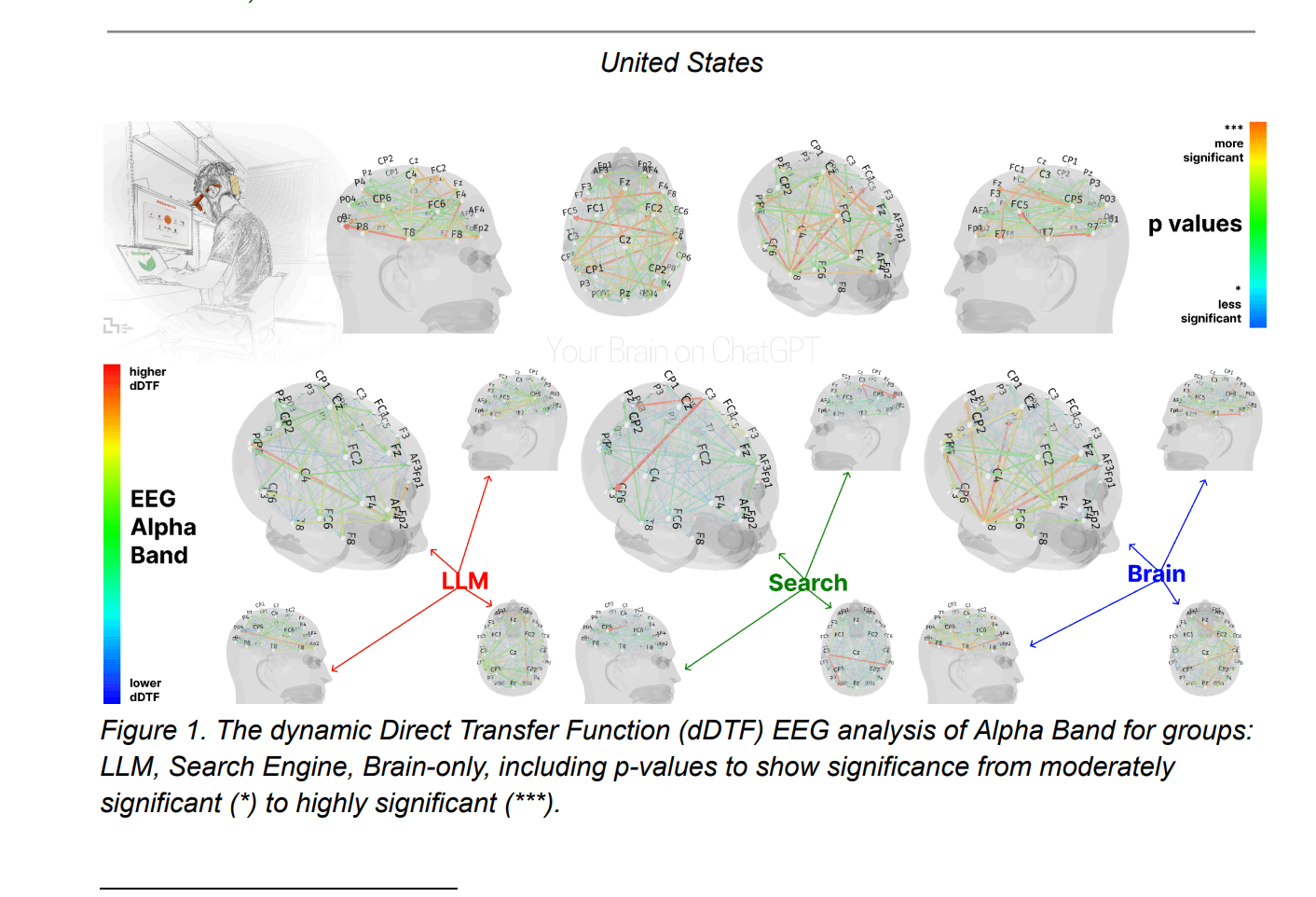

mit scanned brains and found something worse

mit media lab went further. they hooked 54 people up to eeg machines while they wrote essays.

the chatgpt users showed the weakest brain connectivity patterns. the least neural engagement. their brains were literally doing less work, and you could see it in the eeg data.

after four months they "consistently underperformed at neural, linguistic, and behavioral levels." every metric got worse over time. they didn't plateau. they got worse.

the lead researcher was so concerned she released it before peer review. she said "i am afraid in 6-8 months, there will be some policymaker who decides, 'let's do gpt kindergarten.' i think that would be absolutely detrimental."

they call it cognitive offloading, and apparently it’s melting our brains

researchers in germany ran a study in 2024. students using llms for research showed "significantly lower cognitive load." their brains worked less hard. sounds good until you read the next sentence.

despite reduced mental effort, these students showed "lower-quality reasoning and argumentation" compared to those using google. worse answers. worse logic. worse ability to build arguments.

this is cognitive offloading. your brain stops trying because it expects ai to handle it.

a 2025 study with 666 participants found "a significant negative correlation between frequent ai tool usage and critical thinking abilities." more ai use equals worse thinking. that's it.

researchers are calling it "metacognitive laziness." your brain's ability to think about thinking starts dying. you stop questioning. you stop analyzing. you just prompt and paste.

stanford's ai index 2024 confirms it. ai makes tasks easier but fundamentally changes how we engage with problems. and not in a good way.

and the last one! (promise)

microsoft research found higher confidence in ai correlates with less critical thinking. the more you trust it, the less you think.

ok so we’re getting dumber…check ✔️.

what about creativity in all this?

everyone's creativity looks identical now

science advances published something weird in 2024. yes, individuals using ai wrote more "creative" stories according to judges. but the diversity of ideas across the group crashed.

summary: everyone's creativity converged on similar patterns. same metaphors. same structures. same everything.


here are a few more i found and skimmed:

nature published supporting evidence. ai-assisted creative work shows "convergence patterns" across different users.

wharton found 94% of ideas from chatgpt users "shared overlapping concepts." nine different people named their toy invention "build-a-breeze castle." independently. nine people. same name.

one researcher said "the true value of brainstorming stems from the diversity of ideas rather than multiple voices repeating similar thoughts."

students know they're getting dumber

harvard graduate school of education documented it. students use ai for homework completion, not learning. they know it. they do it anyway. and so do we, right?

a meta-analysis of 51 studies in 2025 found chatgpt has a "large positive impact on improving learning performance" but only a "moderately positive impact" on learning perception and higher-order thinking.

performance goes up a lot. deeper thinking improves far less. the same gap shows up study after study.

i shared this one a while back: researchers in ghana ran a controlled study. students using chatgpt showed changes in critical, reflective, and creative thinking. mostly negative changes.

another systematic review confirmed it. ai makes you feel smarter while making you actually dumber?

researchers are kinda freaking out

mit's nataliya kosmyna: "developing brains are at the highest risk."

stanford and mit productivity study found ai helped workers finish tasks 14% faster. but only novices saw big gains. experts barely improved. because experts still knew how to think.

nature published warnings about "artificial intelligence and illusions of understanding in scientific research." even scientists fool themselves.

ok ok great… that’s enough fear and utter despair. but how do we tackle this?

what actually works according to research

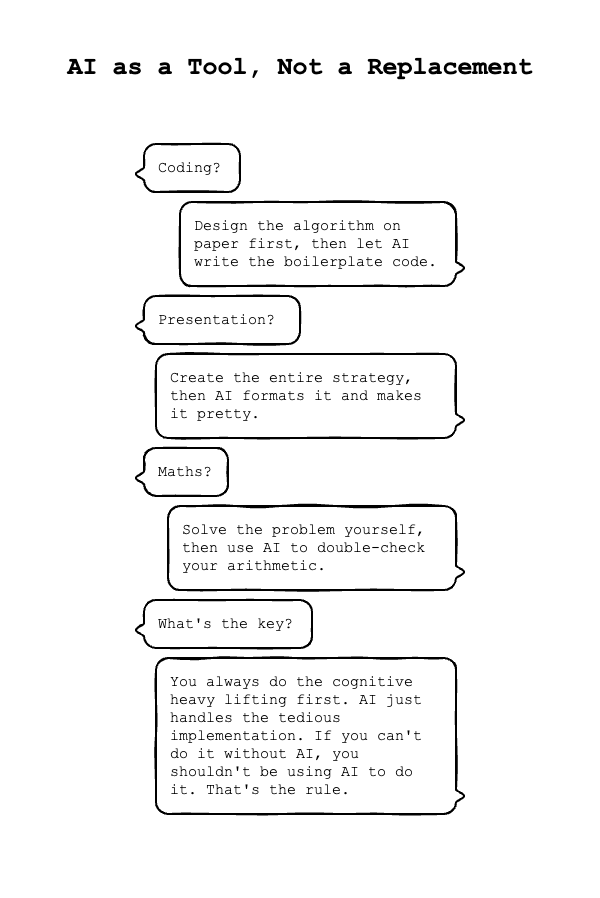

long story short, the damage isn't permanent if you change now. but you need to be strategic about how you use ai. researchers have tested different approaches and some actually preserve your cognitive abilities while still getting productivity gains.

harvard and wharton developed what they call the centaur method, named after the mythological creature that's half human, half horse. you stay the human brain, ai becomes the workhorse. you do all the thinking and decision making. ai handles execution and grunt work. never flip this relationship.

what this looks like in practice:

coding? you design the algorithm on paper first, understanding each step and why it's needed. then you let ai write the boilerplate code.

presentation? you create the entire strategy for a presentation, outlining key points and arguments. then ai formats it and makes it pretty.

maths? you solve the math problem yourself, working through the logic. then use ai to double-check your arithmetic.

the key is you always do the cognitive heavy lifting first. ai just handles the tedious implementation. if you can't do it without ai, you shouldn't be using ai to do it. that's the rule.

mit researchers found something they call deliberate difficulty practice. before you touch any ai tool, you need to struggle with the problem for at least 20 to 30 minutes. actual struggle. not just staring at it. write out attempts. make mistakes. hit dead ends. your brain needs to feel the difficulty to maintain its problem-solving circuits.

here's their protocol that actually showed improved outcomes. write your expected answer or solution first, before asking ai anything. be specific about what you think the answer should look like. then prompt ai. but here's the critical part: after ai responds, you must identify at least three things it might be wrong about. force yourself to find potential errors or oversimplifications. this keeps your critical thinking engaged. one researcher described it as "thinking with ai versus letting ai think for you." massive difference in brain activation patterns between these two approaches.

the verification protocol comes from research on ai hallucinations and metacognition. never accept ai output without verification. sounds obvious but almost nobody does it.

the research found that people who verify ai outputs maintain better cognitive function than those who don't.

verification means actually checking primary sources, not just asking ai "are you sure?" it means testing edge cases yourself. running the code ai wrote with different inputs. checking the math by hand for at least one example. reading the original research paper, not just ai's summary. people who do this show no cognitive decline in studies. but it takes discipline because your brain wants to be lazy.
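here's a minimal sketch of the "run the code ai wrote with different inputs" step, in python. `ai_title_case` is a hypothetical stand-in for something an assistant might hand you (it just calls python's built-in `str.title()`, which fits the capitalize-every-word task from earlier), and the test cases are made up for illustration. the point is the order: write the expected outputs by hand first, then check the code against them.

```python
def ai_title_case(title: str) -> str:
    """hypothetical stand-in for ai-written code: capitalize each word."""
    return title.title()


def my_title_case(title: str) -> str:
    """hand-written version: uppercase only the first character of each
    space-separated word and leave the rest of the word untouched."""
    return " ".join(word[:1].upper() + word[1:] for word in title.split(" "))


def check(fn, cases: dict[str, str]) -> list[str]:
    """return every input where fn's output differs from the hand-worked answer."""
    return [inp for inp, expected in cases.items() if fn(inp) != expected]


# expected outputs written by hand *before* running anything (illustrative cases)
HAND_CHECKED = {
    "are llms making your brain lazy": "Are Llms Making Your Brain Lazy",
    "don't prompt, think": "Don't Prompt, Think",  # apostrophe edge case
    "": "",                                          # empty input edge case
}

if __name__ == "__main__":
    print(check(ai_title_case, HAND_CHECKED))  # ["don't prompt, think"]
    print(check(my_title_case, HAND_CHECKED))  # []
```

the built-in `str.title()` treats the apostrophe as a word boundary, so "don't" comes back as "Don'T". it looks fine on the obvious input and only breaks on the edge case, which is exactly the kind of thing you catch by trying inputs yourself instead of asking the ai "are you sure?"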

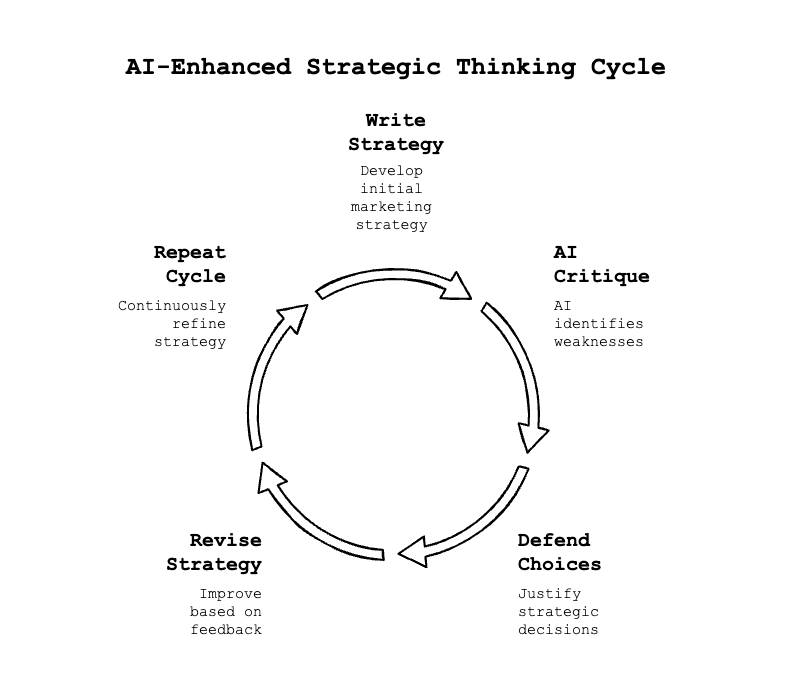

pnas published research showing that using ai as a "sparring partner" rather than an "answer machine" completely changes the cognitive impact. sparring means you argue with it. you challenge its logic. you ask it to defend its position. you propose alternative solutions and make ai explain why they won't work.

"thinking with ai versus letting ai think for you."

concrete example: instead of asking "write me a marketing strategy," you write your strategy first. then you tell ai "here's my marketing strategy. attack it. find every weakness." then you defend your choices. then you revise based on valid criticisms only. this maintains all the neural pathways involved in strategic thinking while still benefiting from ai's ability to spot patterns you might miss. studies show this approach actually improves cognitive function over time rather than degrading it.

academic research on metacognitive sensitivity found that maintaining human oversight is absolutely critical. but oversight doesn't mean just reviewing. it means active engagement at every step.

the most effective protocol they found: break any task into chunks. do the first chunk yourself completely. use ai for the second chunk but modify its output substantially. do the third chunk yourself again. this cycling between human and ai work keeps your brain engaged. people using this method showed no cognitive decline after six months of heavy ai use. some even showed improvement in problem-solving scores.

timing matters too. research shows using ai at the beginning of a task causes the most cognitive damage. using it at the end for polish and refinement shows the least impact. so if you're writing an article, write the entire first draft yourself. struggle through it. only then use ai for editing suggestions. if you're coding, build the entire logic yourself. only then use ai for optimization. if you're solving a problem, find the solution yourself. only then use ai to stress-test it.

the research is incredibly consistent on one point. the moment you let ai do the thinking for you, your brain starts to atrophy. but if you maintain the cognitive load and use ai just for leverage, you can get massive productivity gains without losing your ability to think. it just requires discipline that almost nobody has right now.

obviously, tech companies want you dependent

they measure "cognitive offload rate" as a success metric. your dependency is their business model.

research is clear. more ai for thinking tasks means less neural engagement. students using ai for homework train their brains not to think. workers using ai for problem-solving lose the ability to solve problems. everyone using ai for creativity makes the same stuff.

npr: "amateur writers using ai tools produced stories that were deemed more creative, but the research suggests the creativity of the group overall went down."

you can keep using ai as a crutch and watch your thinking abilities decline. the research proves it's happening. brain scans show it. test scores confirm it.

or use ai strategically. amplify thinking instead of replacing it. maintain struggle. preserve difficulty. keep your brain engaged.

your brain is your only real competitive advantage. not prompting skills. not ai subscriptions. your actual ability to think.

the studies are unanimous.

and it’s as simple as this: use it or lose it.

coz it's not irreversible.

yet.

⬛️

👋 by the way, we run a community called genai ⚫️ circle. it’s where i learn everything i share. it’s invite-only. as a subscriber of this newsletter you can apply to join. check it out here: www.thegenaicircle.com
